Domain Routing: the AI developer's superpower

Since OpenAI released ChatGPT more than two years ago, artificial intelligence has advanced at an accelerating pace in capability, complexity, and quality. Equally dizzying is the number of options for harnessing AI: thousands of open-source LLaMA-based models, plus powerful closed models from Anthropic, Google, and OpenAI.

Many teams pick a single “best” model at first, then periodically re-evaluate as requirements change. The savviest companies and developers use multiple models for different use cases, which suggests that the best AI may be a combination of several. You just need a system that quickly determines which AI to consult at any given moment. A similar idea underlies Mixture-of-Experts (MoE) large language models.

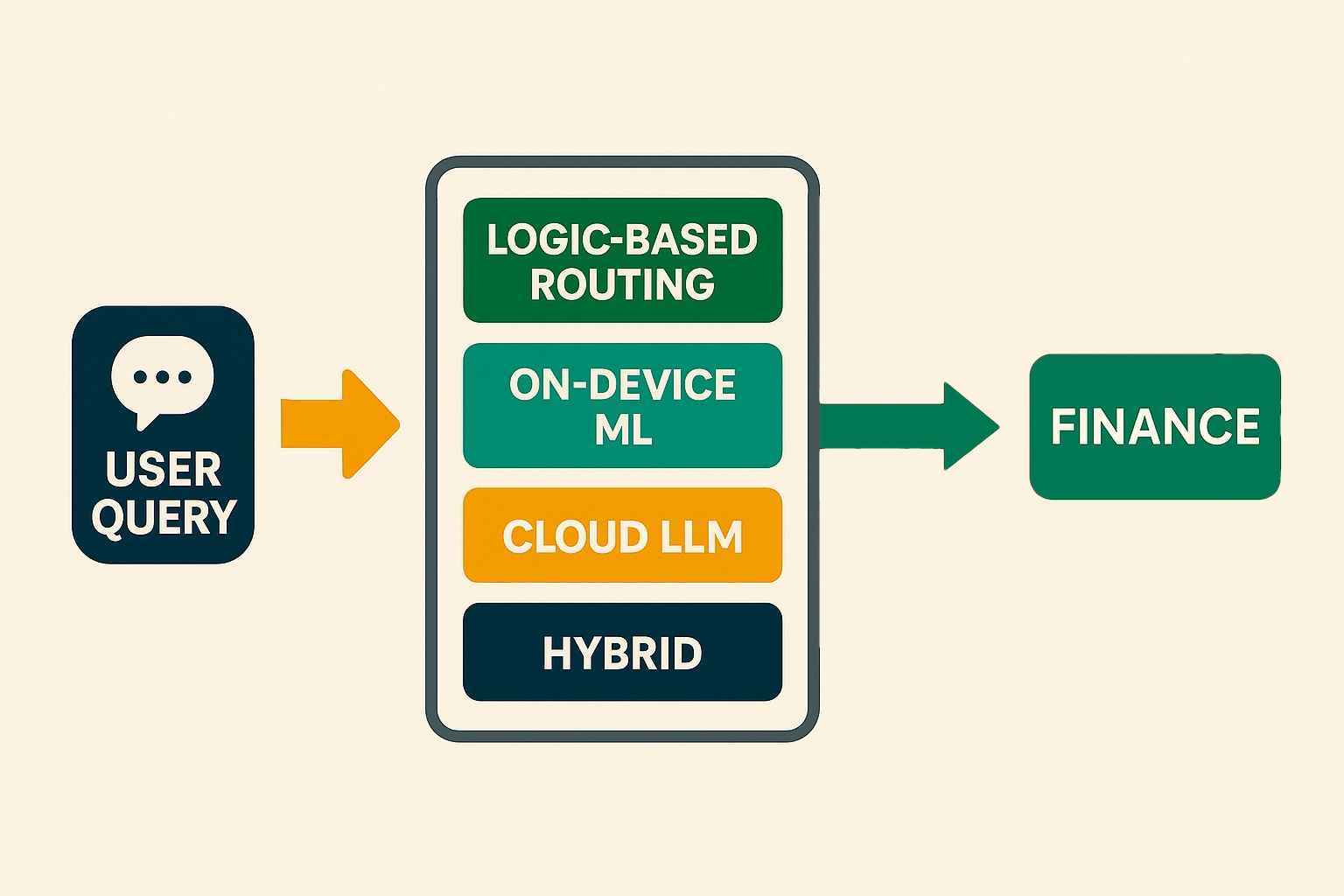

MoE models use a kind of switchboard: a learned gating network decides which of the model’s subnetworks are the best “experts” to handle a given piece of input. At the application level, routing criteria can draw on many factors, from which model gives the highest-quality response to which gives the fastest or cheapest one. Not every query needs a cannon; many only need a feather, and most lie somewhere in between. MoE models are trained to make that determination, but developers can make it too, often with more precision for their own use cases.

Determining the “domain” of a query, and therefore which direction to send it, is a powerful capability. By intelligently dispatching each query to the best-suited “expert,” you can optimize for cost, speed, privacy, and quality together, all from a single entry point.
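To make the dispatch idea concrete, here is a minimal, language-neutral sketch in Python. It is not AuroraToolkit's Swift API: the handler functions, the domain labels (`private`, `simple`, `complex`), and the toy keyword classifier are all hypothetical stand-ins chosen for illustration.

```python
from typing import Callable, Dict

# Hypothetical handlers standing in for real backends.
def on_device_model(query: str) -> str:
    return f"[on-device] {query}"

def rule_based_reply(query: str) -> str:
    return f"[rules] {query}"

def cloud_model(query: str) -> str:
    return f"[cloud] {query}"

def classify_domain(query: str) -> str:
    """Toy classifier (an assumption for this sketch).

    A real router might use regex rules, an on-device ML model, or an LLM.
    """
    text = query.lower()
    # Sensitive-looking queries stay local.
    if any(term in text for term in ("password", "ssn", "account number")):
        return "private"
    # Very short queries are treated as simple.
    if len(text.split()) <= 6:
        return "simple"
    return "complex"

# Single entry point: one table maps each domain to its "expert".
HANDLERS: Dict[str, Callable[[str], str]] = {
    "private": on_device_model,
    "simple": rule_based_reply,
    "complex": cloud_model,
}

def route(query: str) -> str:
    """Classify the query, then dispatch it to the matching handler."""
    domain = classify_domain(query)
    return HANDLERS[domain](query)
```

The key design choice is that classification and handling are separate: you can swap the classifier for something smarter without touching the handlers, and add a new domain by adding one table entry.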

Why this matters

Cost efficiency

Route simple or high-volume queries through logic-based or on-device ML paths to avoid unnecessary cloud LLM calls and keep API bills in check.

Latency reduction

Handle real-time or interactive prompts locally (via logic-based routing or ML classification) for sub-second responses, reserving cloud services for more complex work.

Privacy and security

Keep PII and other sensitive data on-device, or enforce handling policies locally via business rules, reducing the risk of exposure when you don’t need a cloud round-trip.

Robust accuracy

Cross-validate ambiguous queries using dual-model or hybrid routing, boosting confidence and reducing misclassifications.

These benefits matter not only for products like our smartphones, which hold our most private information, but also for businesses that want to build AI features under strict security or compliance requirements.

The four pillars of AuroraToolkit routing

With the latest release of the AuroraToolkit core library for iOS/Mac, there are now four domain routing options to choose from, covering most potential use cases. All are based on LLMDomainRouterProtocol:

- LLMDomainRouter: a simple, LLM-based classification router

- CoreMLDomainRouter: a private, offline classification router

- LogicDomainRouter: a pure logic-based router that can, for example, catch email addresses, credit card numbers, and SSNs instantly via regex

- DualDomainRouter: combines two contrasting routers and uses confidence logic to resolve conflicts
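As an illustration of the logic-based approach, the sketch below flags email addresses, card-like numbers, and US SSNs with regular expressions. It is written in Python rather than Swift and does not reproduce LogicDomainRouter's actual API; the patterns, the function names `detect_pii` and `logic_route`, and the `private`/`public` labels are assumptions, and the patterns are deliberately loose.

```python
import re
from typing import List

# Illustrative patterns only (assumptions for this sketch). Production PII
# detection needs stricter validation, e.g. a Luhn check for card numbers.
RULES = [
    ("ssn", re.compile(r"\b\d{3}-\d{2}-\d{4}\b")),
    # 13 to 16 digits, optionally separated by spaces or hyphens.
    ("credit_card", re.compile(r"\b(?:\d[ -]?){13,16}\b")),
    ("email", re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b")),
]

def detect_pii(query: str) -> List[str]:
    """Return the label of every rule whose pattern appears in the query."""
    return [label for label, pattern in RULES if pattern.search(query)]

def logic_route(query: str) -> str:
    """Route to 'private' if any rule fires; otherwise 'public'."""
    return "private" if detect_pii(query) else "public"
```

Because no model is involved, a check like this runs in microseconds and behaves deterministically, which is why rule-based routing is a natural first gate for sensitive data.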

These four paradigms (cloud-based LLM, on-device ML, logic-based routing, and hybrid ensemble) cover the vast majority of real-world needs. More specialized routing strategies can be built by extending LLMDomainRouterProtocol or its extension ConfidentDomainRouter.
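One way to picture the hybrid approach is the sketch below, which combines two routers that each report a domain and a confidence score. This is an assumed conflict-resolution policy for illustration, not a description of how DualDomainRouter is implemented; `Prediction`, `dual_route`, the threshold value, and both toy routers are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass(frozen=True)
class Prediction:
    domain: str
    confidence: float  # 0.0 to 1.0

Router = Callable[[str], Prediction]

def dual_route(query: str, primary: Router, secondary: Router,
               threshold: float = 0.7, fallback: str = "general") -> str:
    """Assumed policy: agree -> accept; disagree -> trust the more
    confident router, but only if it clears the threshold."""
    a, b = primary(query), secondary(query)
    if a.domain == b.domain:
        return a.domain
    best = a if a.confidence >= b.confidence else b
    return best.domain if best.confidence >= threshold else fallback

# Two toy routers with different strategies (hypothetical).
def keyword_router(query: str) -> Prediction:
    if "invoice" in query.lower():
        return Prediction("finance", 0.9)
    return Prediction("general", 0.4)

def length_router(query: str) -> Prediction:
    if len(query.split()) > 8:
        return Prediction("research", 0.6)
    return Prediction("general", 0.5)
```

For example, `dual_route("Where is my invoice?", keyword_router, length_router)` resolves the disagreement in favor of the keyword router, because its confidence of 0.9 clears the 0.7 threshold. When neither router is confident enough, the query falls back to a safe default instead of being misrouted.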

In the next article, we’ll take a deep dive into domain classification strategies for routing to multiple models:

- Logic-based

- ML-based

- LLM-based

- Ensemble hybrid