-

Domain classification strategies for routing to multiple models

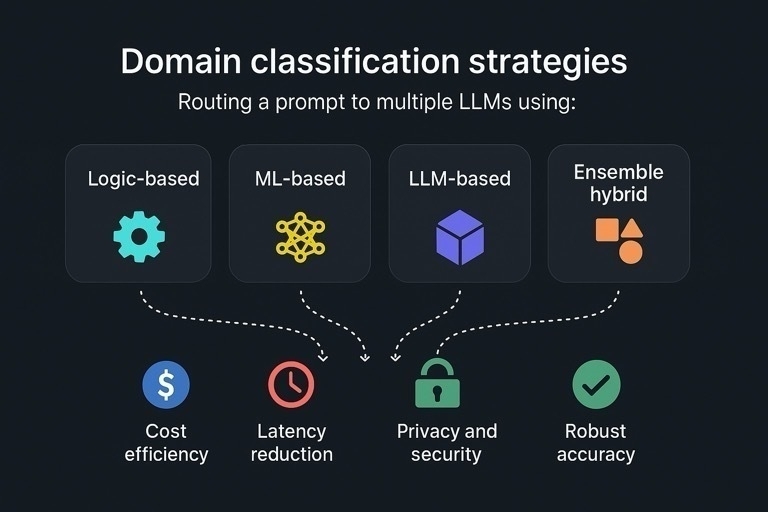

In our first article on routing as an AI developer’s superpower, we introduced multiple strategies for domain classification that can be used by a Large Language Model (LLM) orchestrator to route a prompt to one of multiple large language models.

When you’re working with a single LLM, domain classification is often unnecessary—unless you want to pre-process prompts for efficiency, cost control, or to enforce specific business logic. These techniques may still be useful in such a scenario, but today we’re focusing on multi-model use cases.

While many strategies exist, Aurora Toolkit focuses on four key classification strategies:

- Logic-based

- ML-based

- LLM-based

- Ensemble hybrid

Domain classification strategies

The domain classification strategy you choose for routing affects:

- Cost efficiency

- Latency reduction

- Privacy and security

- Robust accuracy

Logic-based

Using in-app deterministic logic to determine the domain of a prompt is the fastest way to classify it on-device. Your logic could be as simple as estimating the number of tokens in a prompt, or as complex as switching logic that evaluates multiple conditions. It could also be a regex-based check for SSNs or credit card numbers to decide whether the prompt should be evaluated locally or in the cloud.

Logic-based classification often gives you high cost efficiency, latency reduction, and privacy and security. It can be accurate when matching standard formats like credit card numbers, but accuracy in general is not one of its core strengths.

For this technique, Aurora Toolkit provides LogicDomainRouter.
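To make the idea concrete, here is a minimal sketch of logic-based classification, written in Python for brevity. It is not LogicDomainRouter's API: the regex patterns, the `MAX_LOCAL_TOKENS` threshold, the four-characters-per-token estimate, and the "private", "local", and "cloud" labels are all assumptions chosen for illustration.

```python
import re

# Hypothetical patterns and threshold, for illustration only.
SSN_PATTERN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")
CARD_PATTERN = re.compile(r"\b(?:\d[ -]?){13,16}\b")
MAX_LOCAL_TOKENS = 512


def estimate_tokens(prompt: str) -> int:
    """Rough heuristic: about four characters per token for English text."""
    return max(1, len(prompt) // 4)


def classify(prompt: str) -> str:
    """Return "private", "local", or "cloud" using deterministic rules only."""
    # Anything that looks like an SSN or card number stays on-device.
    if SSN_PATTERN.search(prompt) or CARD_PATTERN.search(prompt):
        return "private"
    # Short prompts are assumed cheap enough to handle locally.
    if estimate_tokens(prompt) <= MAX_LOCAL_TOKENS:
        return "local"
    return "cloud"
```

The card pattern is deliberately loose, so it will also flag other long digit runs. For a privacy check, a false positive that keeps a prompt on-device is usually the cheaper mistake.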

ML-based

Modern operating systems typically provide built-in support for running Machine Learning (ML) models on-device. Android and iOS/macOS platforms, in particular, offer powerful native frameworks, LiteRT (Google’s rebranded successor to TensorFlow Lite) and Core ML, which allow developers to train or fine-tune models using open-source tools like PyTorch, then convert and run them on-device with hardware acceleration. Task-specific ML models are typically far smaller than LLMs, making them fast and easy to integrate into mobile and desktop applications.

ML-based classification gives you latency reduction, privacy and security, and cost efficiency. When your models are well trained, it can also offer strong accuracy.

For this technique, Aurora Toolkit provides CoreMLDomainRouter.
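As a rough illustration of what a small classifier does, the sketch below trains a toy naive Bayes model in plain Python and returns a domain together with a confidence score. It is a stand-in, not how CoreMLDomainRouter works: a real app would load a trained Core ML or LiteRT model, and the class name, domains, and training sentences here are made up.

```python
import math
from collections import Counter, defaultdict


class NaiveBayesDomainClassifier:
    """Toy multinomial naive Bayes over lowercase word counts."""

    def __init__(self):
        self.word_counts = defaultdict(Counter)  # domain -> word -> count
        self.doc_counts = Counter()              # domain -> number of examples
        self.vocab = set()

    def train(self, examples):
        for text, domain in examples:
            words = text.lower().split()
            self.word_counts[domain].update(words)
            self.doc_counts[domain] += 1
            self.vocab.update(words)

    def predict(self, text):
        """Return (domain, confidence); confidence is the posterior probability."""
        words = text.lower().split()
        total_docs = sum(self.doc_counts.values())
        log_scores = {}
        for domain in self.doc_counts:
            total_words = sum(self.word_counts[domain].values())
            score = math.log(self.doc_counts[domain] / total_docs)
            for word in words:
                # Laplace smoothing so unseen words do not zero out a domain.
                count = self.word_counts[domain][word] + 1
                score += math.log(count / (total_words + len(self.vocab)))
            log_scores[domain] = score
        # Normalize the log scores into probabilities that sum to 1.
        peak = max(log_scores.values())
        exp_scores = {d: math.exp(s - peak) for d, s in log_scores.items()}
        norm = sum(exp_scores.values())
        best = max(exp_scores, key=exp_scores.get)
        return best, exp_scores[best] / norm


# Made-up training data; a real model needs far more examples.
EXAMPLES = [
    ("what is the score of the game tonight", "sports"),
    ("who won the football match", "sports"),
    ("how do I rebalance my stock portfolio", "finance"),
    ("what is the interest rate on my savings account", "finance"),
]

classifier = NaiveBayesDomainClassifier()
classifier.train(EXAMPLES)
domain, confidence = classifier.predict("who won the game")
```

The confidence value matters as much as the label: it is what lets a router decide whether to trust this prediction or hand the prompt to something more capable.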

LLM-based

In late 2022, LLMs were compelling. By 2023, their capabilities had grown so quickly that entirely new businesses were being built on top of them. In 2024, the conversation shifted—questions about AI’s impact on the job market started to get serious. And in 2025, we’re seeing it play out in real time, with memos from companies like Shopify and Duolingo indicating they’re now “AI-first” when it comes to hiring.

The point is: Large Language Models are now among the most capable classifiers available, exceeding human-level performance on many tasks.

LLM-based classification gives you robust accuracy, and varying levels of cost efficiency, latency reduction, privacy, and security.

For this technique, Aurora Toolkit provides LLMDomainRouter.
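One way to picture LLM-based classification is as a constrained prompt plus strict parsing of the reply. The Python sketch below assumes a hypothetical `complete` function that sends text to whichever LLM you use and returns its reply as a string. It does not reflect LLMDomainRouter's actual interface, and the prompt wording and `default` label are illustrative.

```python
from typing import Callable, Sequence


def build_classification_prompt(prompt: str, domains: Sequence[str]) -> str:
    """Ask the model to answer with exactly one of the allowed domain names."""
    options = ", ".join(domains)
    return (
        "Classify the user prompt into exactly one of these domains: "
        f"{options}.\nReply with the domain name only.\n\n"
        f"User prompt: {prompt}"
    )


def classify_with_llm(
    prompt: str,
    domains: Sequence[str],
    complete: Callable[[str], str],  # hypothetical LLM call, injected by the caller
    default: str = "general",
) -> str:
    reply = complete(build_classification_prompt(prompt, domains))
    # Normalize whitespace, case, and a trailing period before matching.
    answer = reply.strip().lower().rstrip(".")
    for domain in domains:
        if answer == domain.lower():
            return domain
    # Anything outside the allowed list is treated as unclassified.
    return default
```

Injecting `complete` keeps the sketch independent of any vendor SDK and makes it easy to test with a stub. Validating the reply against the allowed list guards against the model answering with a sentence instead of a label.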

Ensemble hybrid

While the most capable general classifiers are LLMs, they are frequently not cost-effective for such a task. Using an LLM to classify a prompt—only to then pass it to another LLM for processing—can introduce unnecessary latency and cost.

A great way to get better, more balanced results from all the previous strategies is to build an ensemble router using multiple techniques. For example, a logic-based router can be combined with an ML-based router to take your overall accuracy percentage from the 80s to the mid-90s. At scale, this could save thousands of dollars or more—especially when routing avoids your most expensive fallback LLM.

Ensemble-based classification with a highly capable fallback option gives you the best balance of cost efficiency, latency reduction, privacy and security, and robust accuracy.

For this technique, Aurora Toolkit provides a confidence-based DualDomainRouter.
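The following Python sketch shows one plausible way to combine two routers by confidence, with an expensive fallback used only when they disagree and neither is sure. The resolution rules and the 0.8 threshold are assumptions for illustration, not a description of how DualDomainRouter resolves conflicts.

```python
from typing import Callable, Tuple

Prediction = Tuple[str, float]  # (domain, confidence between 0 and 1)
Router = Callable[[str], Prediction]


def ensemble_route(
    prompt: str,
    primary: Router,
    secondary: Router,
    fallback: Callable[[str], str],
    threshold: float = 0.8,  # hypothetical cutoff; tune on your own data
) -> str:
    first = primary(prompt)
    second = secondary(prompt)

    # Agreement between two independent routers is treated as sufficient.
    if first[0] == second[0]:
        return first[0]

    # On disagreement, trust the more confident router only if it is
    # confident enough; otherwise pay for the expensive fallback.
    best = max(first, second, key=lambda prediction: prediction[1])
    if best[1] >= threshold:
        return best[0]
    return fallback(prompt)
```

In this arrangement the fallback, for example an LLM-based classifier, runs only for the ambiguous minority of prompts, which is where the cost and latency savings come from.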

In the next article, we’ll take a deep dive into several use cases based on these domain routing strategies:

- PII filtering

- Cost and latency benchmarks

- On-device vs. cloud tradeoffs

- Building custom routers

-

Software development in the AI era - are programming languages almost over?

For the longest time I put off adding support for Google Gemini in Aurora Toolkit. Google’s API was more complicated than those modeled on OpenAI’s API design, and I wanted to move on to feature ideas I had, like implementing workflows and agents. I figured eventually I’d come back around to Gemini support when I needed it, or someone else would clone the project and add it themselves.

With all the talk about Gemini 2.5 Pro exp being “the world’s best coding model”, I tried it to see what it could do.

Giving it access to the AuroraLLM module, so it could understand the structs, protocols, and how the Anthropic, OpenAI, and Ollama implementations worked, I asked Gemini to write a GoogleService implementation to match.

I don’t know whether to be thrilled or terrified, but it nailed a 99.9% working implementation in one shot. It needed only the most minor manual corrections afterward, mainly because it had to guess how to use the debug logging, which is defined in the AuroraCore module that I didn’t provide. It worked so well that, after testing it with some of the practical examples in the toolkit, I wrote a commit message that simply says:

- Note: Written by Gemini 2.5 Pro experimental, with some minor edits

While a lot of the toolkit has been written with AI as my assistant/coding partner, I drove its development, made changes, questioned ideas, refactored, and re-shared with ChatGPT to make sure it understood what I was doing and what I wanted help with. In this case, it’s mostly customization based on previous code. So, still not vibe-coding, but close enough to see the possibilities. It casts recent comments by AI industry leaders like Sam Altman and Dario Amodei that AI will be writing nearly all code by the end of this year in a different light to me.

Since the major players will need to one-up Google, this is all but certain to come true, if not by the end of 2025, then certainly by the end of 2026. For now, and for the foreseeable future of software development, one of the best skills we can build is working efficiently with AI tools.

We’re probably on the cusp of a decline of programming languages designed for humans.

I expect hardware capabilities to become much more important than software languages designed to use them. When an AI can understand all the capabilities available to it, it can effectively write its own custom language as needed based on what you ask it to do with them.