-

Introducing Aurora Toolkit 1.0

Throughout human history, whenever someone creates something new, there is always that moment where the idea touches the medium, and an abstract thought starts to become a tangible thing. A stick starts a line in the dirt. A pen starts a stroke on a page. A brush starts to stain a canvas. A bit starts to bite into a piece of wood. A chisel starts to reveal a masterpiece.

In our modern software development process there is an initial keystroke, but the first tangible evidence of an idea made real has a standard name: “Initial commit”. Version control systems before git had a concept of a commit, but it’s hard to imagine anyone using tools like SVN, CVS, or RCS today. So with most modern projects, it’s very easy to scroll all the way back to the initial commit.

Yesterday, after 446 days, I published version 1.0 of Aurora Toolkit – my open source Swift package for integrating AI and ML workflows in apps on Apple platforms. I know it took almost 15 months because I went back to my initial commit to see how I imagined the project from its beginning. I was surprised and delighted to see that all the core elements were in the initial idea.

The Apache License was there from the start, so it was always going to be open source. It had context management, tasks and workflows, and an LLM manager allowing you to mix, match, and combine one or more different AI and ML models (or different instances of the same model!) to create something like a mixture of experts super model for your iOS or Mac app. The one thing missing was an implementation of an actual AI model, but that would come about a week later. Figuring out how tasks and workflows worked was on my mind, and they remain two of the core features of the project today.

Features of Aurora Toolkit 1.0

- Modular Architecture: Organized into distinct modules (Core, LLM, ML, Task Library) for flexibility

- Declarative Workflows: Define workflows declaratively, similar to SwiftUI, for clear task orchestration

- Multi-LLM Support: Unified interface for Anthropic, Google, OpenAI, Ollama, and Apple Foundation Models

- On-device ML: Native support for classification, embeddings, semantic search, and more using Core ML

- Intelligent Routing: Domain-based routing to automatically select the best LLM service for each request

- Convenience APIs: Simplified top-level APIs (LLM.send(), ML.classify(), etc.) for common operations

Examples

Aurora Toolkit also includes several comprehensive examples demonstrating multiple use cases.

One example uses Siri-style routing: clearly public queries are routed to powerful cloud-hosted LLMs from Anthropic, OpenAI, or Google, while obviously or potentially private queries stay on device.

In other examples, workflow tasks fetch information from the Internet, like news articles or App Store reviews, then use AI to process, summarize, and package it into more useful information, insights, and formats.

There is even an example of two different AI models having a productive conversation about a topic – something nobody wants to listen to, but a simple and cool demonstration nevertheless.

What’s Next

My initial idea, the one that ultimately led to this project, was that I wanted something to watch the git folder I was working in and automatically generate commit message details any time the folder changed. I was working on my other project, Hamster Soup, and when I got ready to do a commit, I would copy the diffs and paste them into ChatGPT and ask for a concise git description to use for my commit. I figured I could make a widget that did that for me, and I’d just click it to copy the message to the clipboard. Somehow it became all this, waves hands around.

I still have yet to create that widget.

In the meantime, I have another project I’ve been working on, but I also plan to start a series of articles about Aurora Toolkit, how to use it, and what you can build with it. Look for that sooner rather than later, and who knows, maybe one of those articles will be how I built that git commit widget with full code included.

Compute!’s Gazette, the magazine whose type-in programs are how I actually learned to program back in the ’80s, is back after all. Maybe I’ll channel my childhood and do that article Gazette-style. :)

-

Domain classification strategies for routing to multiple models

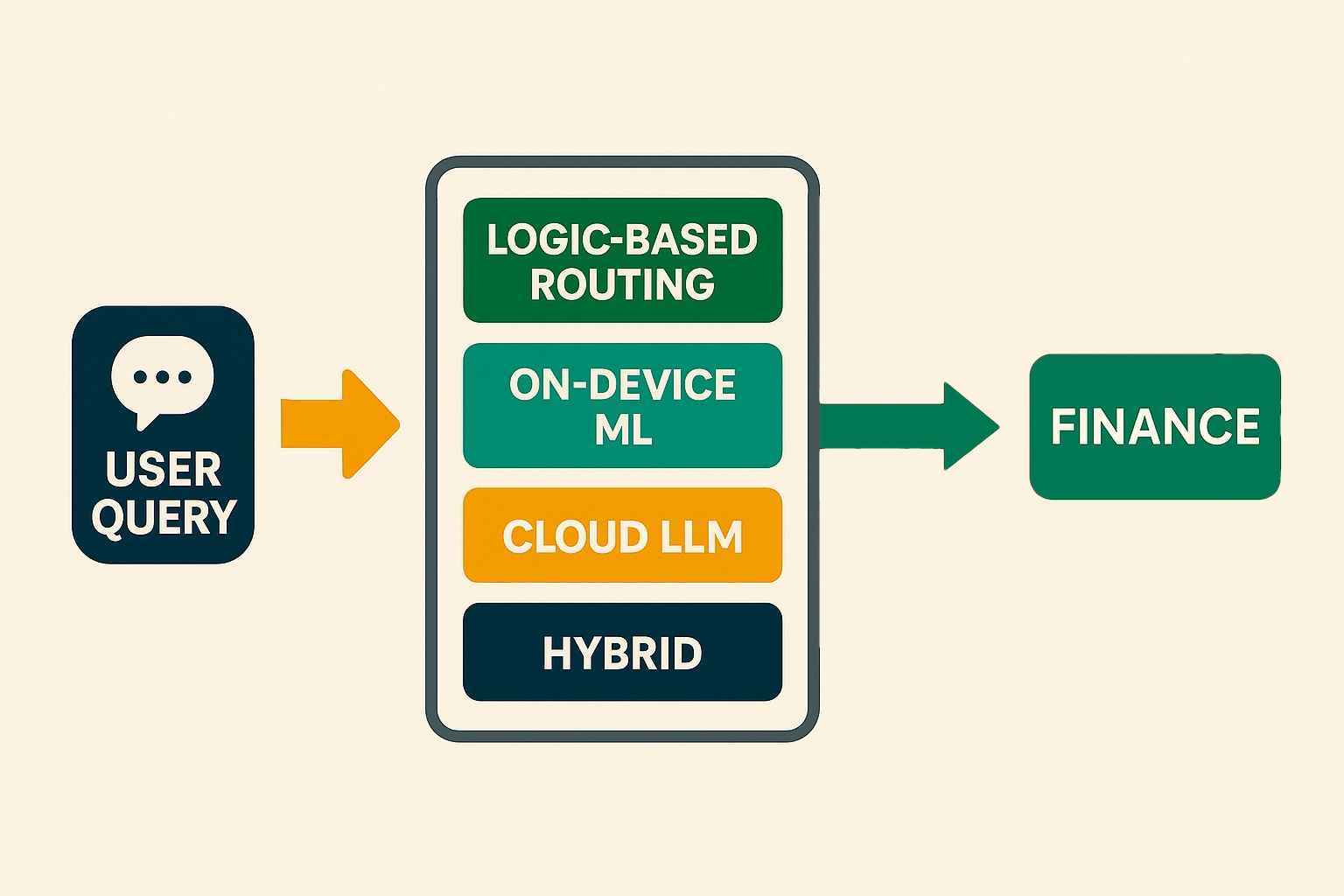

In our first article on routing as an AI developer’s superpower, we introduced multiple strategies for domain classification that can be used by a Large Language Model (LLM) orchestrator to route a prompt to one of multiple large language models.

When you’re working with a single LLM, domain classification is often unnecessary—unless you want to pre-process prompts for efficiency, cost control, or to enforce specific business logic. These techniques may still be useful in such a scenario, but today we’re focusing on multi-model use cases.

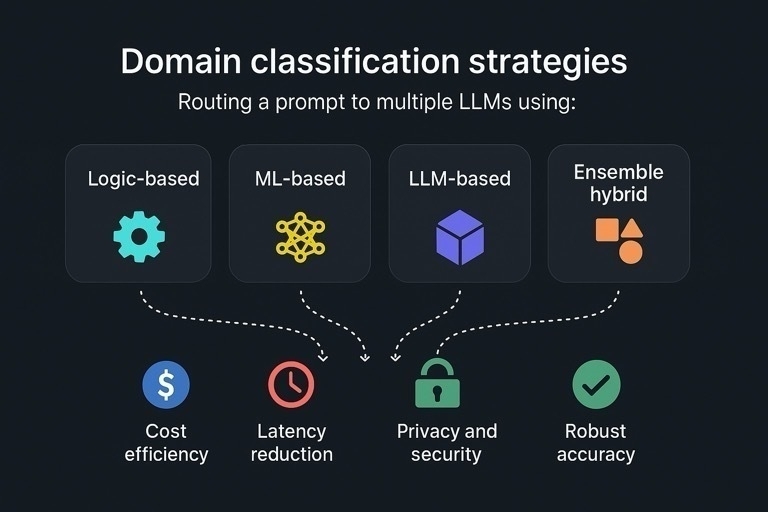

While many strategies exist, Aurora Toolkit focuses on four key classification strategies:

- Logic-based

- ML-based

- LLM-based

- Ensemble hybrid

Domain classification strategies

Using domain classification as a routing strategy matters for:

- Cost efficiency

- Latency reduction

- Privacy and security

- Robust accuracy

Logic-based

Using in-app deterministic logic to determine the domain of a prompt is the fastest way to classify it on-device. Your logic could be as simple as estimating the number of tokens in a prompt, or as complex as switching logic that evaluates multiple conditions. It could also be a regex-based check for SSNs or credit card numbers to decide whether the prompt should be evaluated locally or in the cloud.

Logic-based classification often gives you high cost efficiency, latency reduction, and privacy and security. It may give you accuracy, for example matching some standard formats like credit card numbers, but that is not one of its core strengths.

For this technique, Aurora Toolkit provides LogicDomainRouter.
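Here is a minimal sketch of the idea in plain Swift, independent of LogicDomainRouter's actual API. The type names, the regex patterns, the 256-token budget, and the four-characters-per-token estimate are all assumptions made for illustration.

```swift
import Foundation

// Hypothetical domain names for illustration; not Aurora Toolkit types.
enum RouteDomain: String {
    case onDevice
    case cloud
}

struct LogicClassifier {
    // Illustrative patterns only; production PII detection needs broader, validated rules.
    let sensitivePatterns = [
        #"\b\d{3}-\d{2}-\d{4}\b"#,                          // US SSN such as 123-45-6789
        #"\b(?:\d[ -]?){13,16}\b"#,                         // 13 to 16 digits, optional separators
        #"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"#  // email address
    ]
    // Assumed budget for what the local model handles comfortably.
    var onDeviceTokenLimit = 256

    // Rough rule of thumb (an assumption): about four characters per token.
    func estimatedTokens(in prompt: String) -> Int {
        max(1, prompt.count / 4)
    }

    func classify(_ prompt: String) -> RouteDomain {
        // Rule 1: anything that looks like PII never leaves the device.
        for pattern in sensitivePatterns
        where prompt.range(of: pattern, options: .regularExpression) != nil {
            return .onDevice
        }
        // Rule 2: short prompts are cheap to handle locally; long ones go to the cloud.
        return estimatedTokens(in: prompt) <= onDeviceTokenLimit ? .onDevice : .cloud
    }
}
```

The privacy rule runs first on purpose: a long prompt that contains an email address should still stay local, even if its size alone would send it to the cloud.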

ML-based

Modern operating systems typically provide built-in support for running Machine Learning (ML) models on-device. Android and iOS/macOS, in particular, offer powerful native frameworks, LiteRT (Google’s rebranded successor to TensorFlow Lite) and Core ML, which let developers take models trained or fine-tuned with open-source tools like PyTorch and run them on-device with hardware acceleration. ML models are significantly smaller than LLMs, making them fast and easy to integrate into mobile and desktop applications.

ML-based classification gives you latency reduction, privacy and security, and cost efficiency, and a well-trained model may also offer strong accuracy.

For this technique, Aurora Toolkit provides CoreMLDomainRouter.

LLM-based

In late 2022, LLMs were compelling. By 2023, their capabilities had grown so quickly that entirely new businesses were being built on top of them. In 2024, the conversation shifted—questions about AI’s impact on the job market started to get serious. And in 2025, we’re seeing it play out in real time, with memos from companies like Shopify and Duolingo indicating they’re now “AI-first” when it comes to hiring.

The point is: Large Language Models are now among the most capable classifiers available—often exceeding human-level performance for many tasks.

LLM-based classification gives you robust accuracy, and varying levels of cost efficiency, latency reduction, privacy, and security.

For this technique, Aurora Toolkit provides LLMDomainRouter.
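The general shape of LLM-based classification can be sketched as follows. This is not LLMDomainRouter's implementation: the TextModel protocol, the instruction wording, and the fallback behavior are assumptions, and the call is synchronous only to keep the example short (a real client would be async).

```swift
import Foundation

// Hypothetical stand-in for any LLM client; not Aurora Toolkit's service protocol.
protocol TextModel {
    func complete(prompt: String) -> String
}

struct LLMClassifier {
    let model: any TextModel
    let domains: [String]
    let fallback: String

    // Build instructions that constrain the model to a single label.
    func instructions(for prompt: String) -> String {
        """
        Classify the user prompt into exactly one of these domains: \(domains.joined(separator: ", ")).
        Reply with the domain name only.

        Prompt: \(prompt)
        """
    }

    func classify(_ prompt: String) -> String {
        let reply = model.complete(prompt: instructions(for: prompt))
        // Models add whitespace, casing, or punctuation, so normalize before matching.
        let trimSet = CharacterSet.whitespacesAndNewlines.union(.punctuationCharacters)
        let label = reply.trimmingCharacters(in: trimSet).lowercased()
        // Anything outside the known list falls back to a safe default domain.
        return domains.first { $0.lowercased() == label } ?? fallback
    }
}

// A canned model so the sketch runs without a network call.
struct CannedModel: TextModel {
    let reply: String
    func complete(prompt: String) -> String { reply }
}
```

Validating the reply against the domain list matters more than it looks: a model that answers with a sentence instead of a label should land on the fallback rather than produce an unroutable domain.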

Ensemble hybrid

While the most capable general classifiers are LLMs, they are frequently not cost-effective for such a task. Using an LLM to classify a prompt—only to then pass it to another LLM for processing—can introduce unnecessary latency and cost.

A great way to get better, more balanced results from all the previous strategies is to build an ensemble router using multiple techniques. For example, a logic-based router can be combined with an ML-based router to take your overall accuracy percentage from the 80s to the mid-90s. At scale, this could save thousands of dollars or more—especially when routing avoids your most expensive fallback LLM.

Ensemble-based classification with a highly-capable fallback option gives you the best balance of cost efficiency, latency reduction, privacy and security, and robust accuracy.

For this technique, Aurora Toolkit provides a confidence-based DualDomainRouter.
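One way to picture confidence-based conflict resolution between two routers is the sketch below. It is an illustration under assumptions, not DualDomainRouter's actual logic: the Prediction type, the closure-based classifiers, and the 0.7 threshold are all hypothetical.

```swift
// Hypothetical result type: a domain label plus a confidence between 0 and 1.
struct Prediction {
    let domain: String
    let confidence: Double
}

// Any classifier (logic, ML, or LLM) can be wrapped as a closure for this sketch.
typealias Classifier = (String) -> Prediction

struct EnsembleRouter {
    let primary: Classifier
    let secondary: Classifier
    let fallbackDomain: String
    // Assumed threshold; tune it against your own evaluation data.
    var confidenceThreshold = 0.7

    func route(_ prompt: String) -> String {
        let first = primary(prompt)
        let second = secondary(prompt)

        // Agreement: both routers picked the same domain, so accept it.
        if first.domain == second.domain { return first.domain }

        // Conflict: trust the more confident router if it clears the threshold.
        let best = first.confidence >= second.confidence ? first : second
        if best.confidence >= confidenceThreshold { return best.domain }

        // Neither is sure: hand off to the expensive but capable fallback.
        return fallbackDomain
    }
}
```

In this sketch, agreement is accepted even at low confidence, on the theory that two independent techniques reaching the same answer is itself evidence. A stricter variant could also require a minimum confidence when the routers agree.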

In the next article, we’ll take a deep dive into several use cases based on these domain routing strategies:

- PII filtering

- Cost and latency benchmarks

- On-device vs. cloud tradeoffs

- Building custom routers

-

Domain Routing: the AI developer's superpower

Since OpenAI released ChatGPT more than two years ago, Artificial Intelligence has advanced at an accelerating pace in capability, complexity, and quality. Equally dizzying is the number of options to harness AI: thousands of open-source LLaMA models plus powerful closed models from Anthropic, Google, and OpenAI.

Many teams pick a single “best” model at first, then periodically re-evaluate as requirements change. The savviest companies and developers make use of multiple models for various use cases, suggesting the best AI would be a combination of any number of AIs. You just need a system to quickly determine which AI to consult at any given moment. This is the underlying principle of Mixture-of-Experts (MoE) large language models.

MoE systems use a kind of switchboard, determining what a particular query is about and which of its subnetworks are the best “experts” to respond to it. Criteria could be based on many factors, from what gives the highest quality response to what gives the most efficient or cheap response. Not every query needs a cannon; many only need a feather, and most lie somewhere in between. MoE systems are trained to make that determination, but developers can do this too, and be far more precise in their decision-making in most cases.

Determining which direction to turn, aka the “domain” of the query, is a powerful capability to have. By intelligently dispatching each query to the best-suited “expert,” you simultaneously optimize for cost, speed, privacy, and quality—all from a single entry point.
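At the application level, that single entry point can be as small as a lookup table from domain to expert. The sketch below uses hypothetical names throughout (Expert, Switchboard) and stub closures in place of real model calls. It illustrates the switchboard idea and is not AuroraToolkit's API.

```swift
// Hypothetical names throughout; a sketch of the switchboard idea only.
struct Expert {
    let name: String
    let respond: (String) -> String
}

struct Switchboard {
    let classify: (String) -> String   // returns a domain label for a query
    let experts: [String: Expert]      // domain label -> expert
    let generalist: Expert             // used when no expert covers the domain

    // Pick the expert registered for the query's domain, or the generalist.
    func expert(for query: String) -> Expert {
        experts[classify(query)] ?? generalist
    }

    // The single entry point: classify, dispatch, and return the response.
    func send(_ query: String) -> String {
        expert(for: query).respond(query)
    }
}
```

Because the classifier is just a function from query to domain label, any of the routing techniques can be swapped in without touching the dispatch code.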

Why this matters

Cost efficiency

Route simple or high-volume queries through logic-based or on-device ML paths to avoid unnecessary cloud LLM calls and keep API bills in check.

Latency reduction

Handle real-time or interactive prompts locally (via logic-based routing or ML classification) for sub-second responses, reserving cloud services for more complex work.

Privacy and security

Keep PII and sensitive data on-device or enforce it locally via business rules, reducing the risk of exposure when you don’t need a cloud round-trip.

Robust accuracy

Cross-validate ambiguous queries using dual-model or hybrid routing, boosting confidence and reducing misclassifications.

This is not only important for products like our smartphones–which hold our most private information–but also critical for businesses wanting to build AI features under strict security or compliance requirements.

The four pillars of AuroraToolkit routing

With the latest release of the AuroraToolkit core library for iOS/Mac, there are now four options for domain routing to choose from covering most potential use cases. Based on the LLMDomainRouterProtocol, the library now features:

- LLMDomainRouter: a simple, LLM-based classification router

- CoreMLDomainRouter: private, offline classification router

- LogicDomainRouter: pure logic-based router, e.g. catch email addresses, credit card numbers, and SSNs instantly via regex

- DualDomainRouter: combines two contrastive routers and confidence logic to resolve conflicts

These four paradigms–cloud-based LLM, on-device ML, logic-based routing, and hybrid ensemble–cover the vast majority of real-world needs. Even more custom routing strategies can be designed by extending LLMDomainRouterProtocol or its extension ConfidentDomainRouter.

In the next article, we’ll take a deep dive into domain classification strategies for routing to multiple models:

- Logic-based

- ML-based

- LLM-based

- Ensemble hybrid

-

Introducing Aurora Toolkit: Simple AI Integration for iOS and Mac Projects

Over the summer of 2024, I conducted an experiment to see if I could use Artificial Intelligence to do in a month what took several in previous years, which is to rewrite my long-running Big Brother superfan app to incorporate the latest technologies. I’ve been shipping this app since the App Store opened and every 2-3 years would rewrite it from scratch, to use the latest frameworks and explore fresh ideas for design, user experience, and content. This year, the big feature was adding AI and it seemed only natural to use AI extensively to do so – that was the experiment. The results blew my mind.

Not only was AI the productivity accelerator we’ve all been promised, but it invigorated and filled my well of ideas for what you can do with app development. As the show wound down for this year (Big Brother is a summer TV show), I had gained both a ton of experience and perspective developing with AI and ideas for how I could make this easier for others wanting to integrate it.

So even before Big Brother aired its finale, I had already moved on to my next project, which became Aurora Toolkit, and the first major component of it, AuroraCore. AuroraCore is a Swift Package, and the foundational library of Aurora Toolkit.

I originally envisioned Aurora as an AI assistant, something that lived in the menu bar, or a widget, or some other quick-to-access space. AuroraCore was to be the foundation of the assistant app, but the more I developed my ideas for it, the more I realized it was really a framework for any developer building any kind of app for iOS/Mac. So the AI assistant became a Swift Package for anyone to use instead.

My goals for the package:

- Written in Swift with no external dependencies outside of what comes with Swift/iOS/macOS, making it portable to other languages and platforms

- Lightweight and fast

- Support all the major AI platforms like those from OpenAI, Anthropic, and Google

- Support open source models via Ollama, the leading platform for OSS AI models

- Include support for multiple LLMs at once

- Include some kind of workflow system where AI tasks could be chained together easily

When I use ChatGPT I treat it like Slack with access to multiple developers to collaborate with. I use multiple chat conversations, each fulfilling different roles. One of those conversations is solely for generating git commit descriptions. I gave it my preferred format for commit descriptions, and when I’m ready to make a commit, I simply paste in the diffs and ask, “Give me a commit description in my preferred format using these diffs”.

My initial use case for AuroraCore was to replicate that part of my workflow while developing my Big Brother app. I envisioned a system that watches my git folder for changes, detects updates, and uses a local llama model to generate commit descriptions in my ideal format—continuously. The goal was to streamline the process, so I could copy and paste the description whenever I was ready to commit. Ideally, there would even be a big button I could press to handle it all on demand.

I haven’t actually built that yet, but I built a framework that would make it possible. Also, ChatGPT significantly outperforms llama3 at writing good commit descriptions. I’ve got a lot more prompt work to do on that one, I’m afraid.

But approaching it with these goals and this particular use case helped me press forward down what I feel are some really productive paths. There are three major features of AuroraCore so far that I’m especially excited about and proud of.

Support for multiple Large Language Models at once

From the start, I wanted to use multiple active models, and a way to feed AI prompts to each. I envisioned mixing Anthropic Claude with OpenAI GPT, and one or more open source models via Ollama. The LLMManager in AuroraCore can support as many models as you want. Under the hood, they can all be the same claude, gpt-4o, llama3, gemma2, mistral, etc. models, but each can have its own setup, including token limits and system prompt. LLMManager can handle a whole team of models with ease, understands which models have what token limits and even supported domains, and sends appropriate requests to the appropriate models.

Automatic domain routing

Once I started exploring using multiple models each with different domains, I made an example using a small model to review a prompt and spit out the most appropriate domain that matched a list of domains I gave it. Then I had models set up for each of those domains, so that LLMManager would use the right model to respond. It became obvious that this shouldn’t be an example, but built-in behavior in LLMManager, so first I added support for a model that was consulted first for domain routing. That led to creating a first-class object, LLMDomainRouter, that takes an LLM service model, a list of supported domains, and a customizable set of instructions with a reasonable default. When you route a request through the LLMManager, it will consult with the domain router, then send the request to the appropriate model. The domain router is as fast as whatever model you give it to work with, and you can essentially build your very own “mixture of experts” simply by setting up several models with high quality prompts tailored to different domains.

Declarative workflows with tasks and task groups

A robust workflow system was a key goal for this project, enabling developers to chain multiple tasks, including AI-based actions. Each task can produce outputs, which feed into subsequent tasks as inputs. Inspired by the Apple Shortcuts app (originally Workflow), I envisioned AuroraCore workflows providing similar flexibility for developers.
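To make the output-to-input chaining concrete, here is a minimal sketch with hypothetical Step and Pipeline types. It is not AuroraCore's Workflow API, and the "summarize" step is a stub standing in for an AI call.

```swift
// Hypothetical types sketching output-to-input chaining; not AuroraCore's Workflow API.
struct Step {
    let name: String
    // Receives everything produced so far and returns new named outputs.
    let run: ([String: String]) throws -> [String: String]
}

struct Pipeline {
    let steps: [Step]

    func run(initial: [String: String] = [:]) throws -> [String: String] {
        var values = initial
        for step in steps {
            let outputs = try step.run(values)
            // Later steps see earlier outputs; a repeated key takes the newest value.
            values.merge(outputs) { _, new in new }
        }
        return values
    }
}

let pipeline = Pipeline(steps: [
    Step(name: "fetch") { _ in
        ["article": "Swift 6 adds stricter concurrency checks."]
    },
    Step(name: "summarize") { inputs in
        // A real step would call an LLM here; this stub just truncates the text.
        ["summary": String((inputs["article"] ?? "").prefix(7))]
    }
])
```

Passing a shared dictionary keeps the sketch short. A production design would more likely give each task typed inputs and outputs so that a missing or misnamed value fails early.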

The initial version of Workflow was a simple system that took an array of WorkflowTask classes, and tied the input mappings together with another dictionary referencing different tasks by name. I quietly made AuroraCore public with that, but what I really wanted was a declarative system similar to SwiftUI. It took a few more days, but Workflow has been refactored to that declarative system, with an easier way to map values between tasks and task groups.

The TVScriptWorkflowExample in the repository demonstrates a workflow with tasks that fetch an RSS feed from Associated Press’s Technology news, parse the feed, limit it to the 10 most recent articles, fetch those articles, extract summaries, and then use AI to generate a TV news script. The script includes 2-3 anchors, studio directions, and that familiar local TV news banter we all know and love, or cringe at. It even wraps up on a lighter note, because even the most intense news programs like to end with something uplifting to make viewers smile. The entire process takes about 30-40 seconds to complete, and I’ve been amazed by how realistic the dozens of test runs have sounded.

What’s next

So that’s Aurora Toolkit so far. There are no actual tools in the kit yet, just this foundational library. Other than a simple client I wrote to test a lot of the functionality, no apps currently use this. My Big Brother app will certainly use it starting next year, but in the meantime, I’d love to see what other developers make of it. If someone can help add multimodal support or integrate small on-device LLMs to take advantage of all these neural cores our phones are packing these days—even better.

AuroraCore is backed by a lot of unit tests, but probably not enough. It feels solid, but it is still tagged as prerelease software for now so YMMV. If you try this Swift package out, I’d love to know what you think!

GitHub link: https://github.com/AuroraToolkit/AuroraCore